ARCHIVED CONTENT

You are viewing ARCHIVED CONTENT released online between 1 April 2010 and 24 August 2018 or content that has been selectively archived and is no longer active. Content in this archive is NOT UPDATED, and links may not function.

By Ralph Losey

Scientific Experiments on Inconsistencies of Relevance Determinations in Large Scale Document Reviews

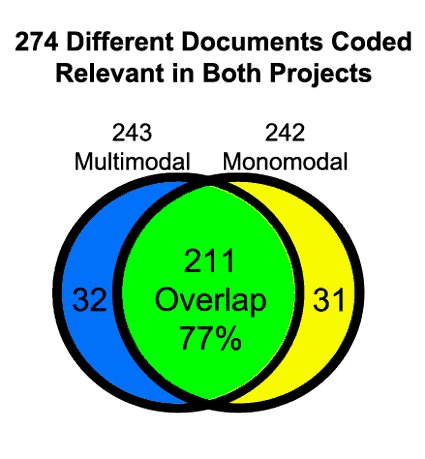

The foundational work in this area was done by Ellen M. Voorhees: Variations in Relevance Judgments and the Measurement of Retrieval Effectiveness, 36 Info. Processing & Mgmt. 697 (2000). The second study of interest to lawyers on this subject came ten years later, from Herbert L. Roitblat, Anne Kershaw and Patrick Oot: Document Categorization in Legal Electronic Discovery: Computer Classification vs. Manual Review, Journal of the American Society for Information Science and Technology, 61 (2010) (draft available from Clearwell Systems). The next study with significant new data on inconsistent relevance determinations in document review was by Maura Grossman and Gordon Cormack in 2012: Inconsistent Assessment of Responsiveness in E-Discovery: Difference of Opinion or Human Error?, 32 Pace L. Rev. 267 (2012); see also their earlier article, Technology-Assisted Review in E-Discovery Can Be More Effective and More Efficient Than Exhaustive Manual Review, XVII Rich. J.L. & Tech. 11 (2011). The fourth and latest study on the subject with new data is my own review experiment, conducted in 2012 and 2013: A Modest Contribution to the Science of Search: Report and Analysis of Inconsistent Classifications in Two Predictive Coding Reviews of 699,082 Enron Documents (2013).

Read the original article at: eDiscovery Team – Less Is More